
The noindex tag serves an essential purpose: it prevents search engines from including a specific page in their index, so the page doesn’t appear in search results. It is not always necessary or desirable to have every page on your website indexed.

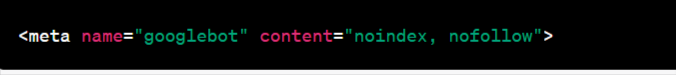

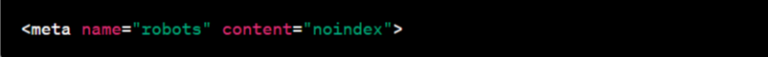

You can instruct search engines not to index a page by adding a noindex directive to a robots meta tag. Simply place the following tag within the <head> section of the page’s HTML: `<meta name="robots" content="noindex">`.
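To check a page’s markup programmatically, the Python sketch below uses the standard-library `html.parser` module to collect the directives from any `<meta name="robots">` tag. The names `RobotsMetaParser` and `robots_meta_directives` are hypothetical, and crawler-specific tags such as `<meta name="googlebot">` are deliberately ignored to keep it short.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directive tokens from <meta name="robots" content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        # HTMLParser lowercases tag and attribute names; values keep their case.
        attributes = {name: (value or "") for name, value in attrs}
        if attributes.get("name", "").lower() == "robots":
            # The content attribute is a comma-separated list, e.g. "noindex, follow".
            for token in attributes.get("content", "").split(","):
                token = token.strip().lower()
                if token:
                    self.directives.add(token)


def robots_meta_directives(html):
    """Return the set of robots meta directives declared in an HTML document."""
    parser = RobotsMetaParser()
    parser.feed(html)
    parser.close()
    return parser.directives


page = '<html><head><meta name="robots" content="noindex"></head><body></body></html>'
print("noindex" in robots_meta_directives(page))  # True
```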

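Alternatively, you can send the noindex directive in an X-Robots-Tag HTTP response header, for example `X-Robots-Tag: noindex`. This approach also works for non-HTML files such as PDFs, which have no <head> section.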
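As a minimal sketch, assuming a Python application served through WSGI (the standard interface implemented by the built-in `wsgiref` package), sending the header only means adding it to the response headers. The app below is hypothetical and simply returns a fixed body; it is called directly here so you can see the headers it emits.

```python
from wsgiref.util import setup_testing_defaults


def app(environ, start_response):
    """A hypothetical WSGI app whose every response carries X-Robots-Tag: noindex."""
    headers = [
        ("Content-Type", "text/plain; charset=utf-8"),
        # Same effect as <meta name="robots" content="noindex">, but for any file type.
        ("X-Robots-Tag", "noindex"),
    ]
    start_response("200 OK", headers)
    return [b"This response should not be indexed.\n"]


# Call the app with a test environment and capture the status and headers it sends.
captured = {}


def start_response(status, headers, exc_info=None):
    captured["status"] = status
    captured["headers"] = dict(headers)


environ = {}
setup_testing_defaults(environ)
body = b"".join(app(environ, start_response))
print(captured["headers"]["X-Robots-Tag"])  # noindex
```

To serve it locally instead, `wsgiref.simple_server.make_server("", 8000, app).serve_forever()` would work; in production the same header is usually set by the web server or framework.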

If a webpage contains the “noindex” tag, Googlebot and other crawlers that support the directive will not index it. If the page had already been indexed before you added the tag, Google removes it from search results the next time it crawls the page, regardless of any inbound links from other sites.

Robots meta directives are not enforced on crawlers: each search engine decides whether and how to honor them, and some treat them as suggestions rather than strict instructions. It’s also worth noting that different crawlers, including Googlebot, may interpret the same robots meta value differently.

Noindex vs. Nofollow:

Typically, people use the “noindex” tag to prevent search engines from indexing a specific web page. When a page includes the “noindex” directive, search engines understand that they should not include the page in their search results. This serves various purposes, such as avoiding duplicate content issues, hiding sensitive information, or excluding pages that are not relevant to search engine users.

Applying the “noindex” tag to a page tells search engines, “Please do not display this page in search results.” Note that search engines still crawl the page, since they have to fetch it to see the tag, and they may read its content and gather data from it, but they won’t include it in their index.

Nofollow:

On the other hand, the “nofollow” attribute (`rel="nofollow"`) tells search engines not to follow a specific link on a page. When a crawler encounters `rel="nofollow"` on a link, it understands that the link should not pass ranking authority, or “link juice,” to the linked page. As a result, the linked page doesn’t benefit from the SEO value of the linking page.

Originally introduced to combat spam and control the flow of link equity across the web, the “nofollow” attribute lets website owners stop search engines from counting certain links when they calculate ranking and authority for the linked pages. This helps prevent link abuse, particularly in user-generated content and advertising.

It’s important to note that the “nofollow” attribute does not entirely block search engine crawlers from accessing the linked page. They can still follow the link and crawl the content, but the link itself won’t contribute to the linked page’s ranking signals.
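To audit which links on a page are nofollowed, you can read the `rel` attribute of each `<a>` element. This is a small sketch using Python’s standard `html.parser`; the names `LinkParser` and `classify_links` and the example URLs are hypothetical. Because `rel` is a space-separated list (for example `rel="nofollow noopener"`), the code checks for the token rather than the whole string.

```python
from html.parser import HTMLParser


class LinkParser(HTMLParser):
    """Records each <a href> and whether its rel attribute includes nofollow."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attributes = {name: (value or "") for name, value in attrs}
        href = attributes.get("href")
        if href is None:
            return  # Anchors without href (e.g. <a name="top">) are not links.
        rel_tokens = attributes.get("rel", "").lower().split()
        self.links.append((href, "nofollow" in rel_tokens))


def classify_links(html):
    """Return (href, is_nofollow) pairs for every link in the document."""
    parser = LinkParser()
    parser.feed(html)
    parser.close()
    return parser.links


html = (
    '<a href="/pricing">Pricing</a>'
    '<a href="https://example.com/ad" rel="nofollow sponsored">Ad</a>'
)
for href, nofollow in classify_links(html):
    print(href, "nofollow" if nofollow else "followed")
# /pricing followed
# https://example.com/ad nofollow
```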

In what situations is it appropriate to utilize the noindex tag?

The noindex meta tag is a directive that you can use in the HTML code of a webpage to instruct search engine bots not to index and display that page in search engine results. It is essential to consider using the noindex tag in specific scenarios to optimize your website’s visibility and ranking potential.

Here are some situations where implementing the noindex tag can be advantageous:

Duplicate content:

When several pages on your website carry duplicate content, using the noindex tag on the duplicate versions is recommended. This keeps search engines from indexing multiple copies of the same content, which can split ranking signals between the copies and negatively impact your rankings.

Temporary pages:

If you have temporary pages that are not meant to be indexed, such as landing pages for limited-time promotions or event-specific content, use the noindex tag on them. This keeps them out of search results, so visitors won’t land on them after the promotion or event is over.


Private or confidential content:

When pages or sections of your website contain sensitive or confidential information, such as internal documents or members-only areas, use the noindex tag so these pages can’t be found through search engines. Keep in mind that noindex does not restrict access: anyone who has the URL can still open the page, so truly confidential content also needs authentication or other access controls.

Non-essential pages:

Some pages on your website are not crucial to your overall SEO strategy or user experience. These could include thank-you pages after form submissions, printer-friendly versions of content, or pages with minimal value. Implementing the noindex tag on such pages helps search engines focus on indexing and ranking your more important content.

Faceted navigation and search result pages:

If your website has faceted navigation or generates numerous search result pages with different filters or sorting options, using the noindex tag on these pages is recommended. This helps prevent search engines from indexing an excessive number of similar pages, which could dilute your website’s overall ranking potential.
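One way to apply this rule consistently is to decide, when a page is rendered, whether its URL is a filtered, sorted, or search-result variant. The Python sketch below assumes hypothetical facet parameter names (`sort`, `color`, `size`, `q`); substitute the parameters your site actually uses. It pairs noindex with follow so crawlers can still reach the products listed on the variant pages.

```python
from urllib.parse import parse_qs, urlsplit

# Hypothetical query parameters that create filtered, sorted, or internal-search variants.
FACET_PARAMS = {"sort", "color", "size", "q"}


def should_noindex(url):
    """Return True if the URL carries any facet or search parameter."""
    params = parse_qs(urlsplit(url).query, keep_blank_values=True)
    return any(name in FACET_PARAMS for name in params)


def robots_meta_for(url):
    """Return the robots meta tag for the page's <head>, or "" if none is needed."""
    if should_noindex(url):
        # "follow" lets crawlers keep following links from the variant to the items it lists.
        return '<meta name="robots" content="noindex, follow">'
    return ""  # Indexable pages need no tag: "index, follow" is the default.


print(robots_meta_for("https://example.com/shoes?color=red&sort=price"))
# <meta name="robots" content="noindex, follow">
```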

You can enhance your website’s visibility and ranking potential by strategically using the noindex tag in the appropriate scenarios mentioned above. However, it is essential to use this tag judiciously and avoid applying it to critical pages that you want indexed and ranked in search engine results. Regularly reviewing your website’s content and implementing the noindex tag where necessary can contribute to an effective SEO approach.

What are the steps for applying the noindex tag on a webpage?

Implementing the noindex tag is a way to instruct search engines not to index a specific web page. This can be useful when you have content you don’t want to appear in search engine results.

Here are the steps to add the robots noindex meta tag manually to your HTML code:

- Open the HTML file of the page to which you want to apply the noindex tag in a text editor or an HTML editor.

- Locate the <head> section of the HTML code. This is usually found near the top of the file.

- Within the <head> section, add the following meta tag: `<meta name="robots" content="noindex">`. This meta tag tells search engines not to index the page.

- Save the changes to your HTML file.
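If you have many static HTML files, the same edit can be scripted. This Python sketch assumes each file contains an opening `<head>` tag; it inserts the robots meta tag right after it and leaves files that already declare a robots meta tag untouched, so existing directives are never duplicated. The function names and the file-path usage are hypothetical.

```python
import re
from pathlib import Path

NOINDEX_TAG = '<meta name="robots" content="noindex">'
# "<head" followed by a word boundary, so "<header>" does not match.
HEAD_OPEN = re.compile(r"<head\b[^>]*>", re.IGNORECASE)
ROBOTS_META = re.compile(r"<meta\s[^>]*name\s*=\s*[\"']?robots\b", re.IGNORECASE)


def add_noindex(html):
    """Insert the noindex meta tag right after the opening <head> tag."""
    if ROBOTS_META.search(html):
        return html  # A robots meta tag already exists; review it by hand instead.
    match = HEAD_OPEN.search(html)
    if match is None:
        raise ValueError("no <head> tag found")
    return html[: match.end()] + "\n  " + NOINDEX_TAG + html[match.end():]


def add_noindex_to_file(path):
    """Rewrite one HTML file in place (pass your own hypothetical path)."""
    file = Path(path)
    file.write_text(add_noindex(file.read_text(encoding="utf-8")), encoding="utf-8")


print(add_noindex("<html><head>\n  <title>Thanks</title>\n</head></html>"))
```

Keeping the edit idempotent means you can rerun the script safely after adding new pages.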

The process may differ if you use a content management system (CMS) like WordPress, Joomla, or Drupal. Here’s a general overview of how to implement the noindex tag in popular CMSs:

- WordPress (per-page noindex settings require an SEO plugin such as Yoast SEO):

    - Log in to your WordPress dashboard.

    - Navigate to the page or post to which you want to apply the noindex tag.

    - Look for the “SEO” or “Yoast SEO” section on the page editor screen.

    - In the meta box, find the option to set the page as “noindex” or “do not index.”

    - Save or update the page to apply the changes.

- Joomla:

    - Log in to your Joomla administration panel.

    - Go to the “Menus” or “Content” section and select the page you want to apply the noindex tag to.

    - Look for the “Metadata Options” or “Robots” field.

    - Set the value to “noindex” or “noindex, follow” to prevent indexing.

    - Save the changes to apply the noindex tag.

- Drupal (requires a module such as Metatag):

    - Log in to your Drupal administration panel.

    - Go to the page you want to apply the noindex tag to.

    - Look for the “Meta tags” or “Page title and meta tags” section.

    - Find the option to set the page as “noindex” or “do not index.”

    - Save the changes to implement the noindex tag.

Remember to review the specific documentation or settings of your CMS for more accurate instructions, as the steps may vary depending on the version and plugins you’re using.

It’s essential to test the implementation of the noindex tag to ensure it’s working correctly. You can use tools like Google Search Console or other SEO analysis tools to verify whether search engines correctly identify the page as noindex.

Pros and Cons of Robots Meta Tags:

Robots meta tags are a powerful tool for controlling how search engines index and display content on a website. While “noindex” is a commonly used robots meta value, others, such as “nofollow,” “nosnippet,” and “max-snippet,” also affect how search engines handle a page. Here are the pros and cons of using robots meta tags:

Pros:

Control over indexing:

Robots meta tags let you control whether search engines index a specific webpage. This is particularly useful when you want to keep certain pages out of search engine results pages (SERPs), such as duplicate content, private or sensitive information, or temporary pages.

Managing crawl budget:

Search engines allocate a limited amount of resources for crawling websites. Using robots meta tags, you can keep less significant or low-value pages out of the index so that search engines concentrate on your website’s most relevant and valuable content. Note, however, that a crawler must still fetch a page to read its robots meta tag, so noindex alone does not stop crawl requests; robots.txt is the tool for that.

Directing search engine bots:

Robots meta tags allow you to guide search engine bots on how to interact with your website. For example, the “nofollow” value in a robots meta tag tells search engines not to follow any of the links on a page (to target a single link, add `rel="nofollow"` to that link instead). This can be beneficial when you want to prevent search engines from following links that might lead to low-quality or irrelevant content.

Controlling snippet display:

Some robots meta values, such as “nosnippet” and “max-snippet,” let you control the text that appears in search result snippets. This can be useful for shaping how your website is represented in search results, ensuring that the displayed snippet accurately reflects the page’s content.
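Because values such as `max-snippet` take an argument after a colon, a robots content string mixes plain flags with key/value pairs. The small parser below (a hypothetical helper, not part of any library) separates the two so you can see exactly what a tag like `<meta name="robots" content="notranslate, max-snippet:50, max-image-preview:large">` asks for.

```python
def parse_robots_content(content):
    """Split a robots meta content string into flags and key/value directives.

    Example input: "notranslate, max-snippet:50, max-image-preview:large".
    """
    flags, values = set(), {}
    for token in content.split(","):
        token = token.strip()
        if not token:
            continue  # Tolerate stray commas such as "noindex,,nofollow".
        # Split on the first colon only, so arguments may themselves contain colons.
        name, sep, arg = token.partition(":")
        name = name.strip().lower()
        if sep:
            values[name] = arg.strip()
        else:
            flags.add(name)
    return flags, values


flags, values = parse_robots_content("notranslate, max-snippet:50, max-image-preview:large")
print(sorted(flags))  # ['notranslate']
print(values)  # {'max-snippet': '50', 'max-image-preview': 'large'}
```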

Cons:

Potential for misconfiguration:

Misconfiguring robots meta tags can have unintended consequences. If you mistakenly apply a “noindex” tag to an important page, search engines may drop it from their index, causing it to disappear from search results. Careful attention and testing are necessary to avoid accidental misconfigurations.

Dependency on search engine support:

The effectiveness of robots meta tags depends on search engines correctly interpreting and implementing them. While major search engines like Google, Bing, and Yahoo! generally support robots meta tags, their interpretations can vary, and smaller or less popular search engines may not support every value.

Limited impact on other search engine activities:

Robots meta tags primarily affect indexing and crawling behaviors. They do not directly influence other search engine optimization (SEO) aspects, such as improving page ranking or attracting organic traffic. Additional SEO techniques and strategies are required for these purposes.

Overreliance on robots meta tags:

Relying solely on robots meta tags to manage indexing and crawling can limit the potential of your website. Other factors, such as the overall site structure, internal linking, and XML sitemaps, are crucial in search engine discovery and indexing. Using a comprehensive approach to SEO is essential for maximizing the visibility and performance of your website.

Understanding the pros and cons of robots meta tags allows you to make informed decisions when implementing them. It’s essential to consider your website’s needs and goals carefully and use robots meta tags in conjunction with other SEO techniques to achieve the best possible results.

The Benefits and Drawbacks of Using HTTP Headers

An alternative to HTML-based meta tags is utilizing HTTP headers to communicate with search engines. This section will explore the advantages and disadvantages of leveraging HTTP headers to control search engine indexing. We’ll discuss the implications of using X-Robots-Tag and other relevant headers.

Benefits

Simplicity and Efficiency:

HTTP headers are a straightforward and efficient way to communicate with search engines. Once configured, a header rule requires minimal effort and can be applied quickly across multiple pages or entire websites.

Flexibility:

HTTP headers provide greater flexibility than HTML meta tags. A meta tag can only be placed in an HTML page, whereas a header can be sent with any type of content (e.g., images, videos, PDF documents) and can be targeted at individual pages or whole groups of files.

Centralized Control:

With HTTP headers, control over indexing directives can be centralized at the server level. This means you can change indexing instructions globally without modifying each page individually, which simplifies management, particularly for larger websites with numerous pages.
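To illustrate centralized control, here is a sketch of WSGI middleware that adds `X-Robots-Tag: noindex` to every PDF response an application produces, without changing the application itself. It assumes a WSGI-based Python stack; on other stacks the equivalent rule would live in the web server configuration. The names `noindex_pdfs` and `downloads_app` are hypothetical.

```python
def noindex_pdfs(app):
    """Wrap a WSGI app so that its PDF responses carry X-Robots-Tag: noindex."""

    def wrapped(environ, start_response):
        def patched_start_response(status, headers, exc_info=None):
            # Find the Content-Type the wrapped app chose (header names are case-insensitive).
            content_type = next(
                (value for name, value in headers if name.lower() == "content-type"), ""
            )
            if content_type.split(";")[0].strip().lower() == "application/pdf":
                headers = list(headers) + [("X-Robots-Tag", "noindex")]
            return start_response(status, headers, exc_info)

        return app(environ, patched_start_response)

    return wrapped


def downloads_app(environ, start_response):
    """Hypothetical app: serves a PDF for /report.pdf and HTML for everything else."""
    if environ.get("PATH_INFO") == "/report.pdf":
        start_response("200 OK", [("Content-Type", "application/pdf")])
        return [b"%PDF-1.4 ..."]
    start_response("200 OK", [("Content-Type", "text/html; charset=utf-8")])
    return [b"<html><body>Hello</body></html>"]


# One wrapper applies the rule to every PDF the app serves.
application = noindex_pdfs(downloads_app)
```

Matching on Content-Type rather than on the URL means the rule still applies if PDFs are later served from new paths.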

Stronger Enforcement:

Because the server attaches HTTP headers such as X-Robots-Tag to every matching response, the directives are applied consistently, even to files that have no HTML <head> in which a meta tag could be placed. Note, however, that major search engines such as Google treat an X-Robots-Tag directive the same as the equivalent robots meta tag, so a header is not inherently obeyed more strictly than a meta tag.

Drawbacks

Technical Expertise Required:

Implementing and managing HTTP headers to control search engine indexing may require technical expertise. It involves configuring server settings or using a content management system (CMS) that supports header customization. This barrier to entry can be challenging for individuals without sufficient technical knowledge.

Limited Crawler Support:

Indexing headers are read by crawlers rather than by web browsers, so crawler support is what matters. While major search engines recognize the X-Robots-Tag header, some older or less common crawlers may not recognize or fully interpret it. Consequently, there is no guarantee that every user agent will understand your indexing directives correctly.

Lack of Standardization:

While some HTTP headers, like X-Robots-Tag, are widely recognized and supported, there is little standardization across the other headers related to search engine indexing. Different search engines may interpret headers differently, leading to inconsistency in how indexing directives are applied.

Difficulty in Debugging:

Unlike HTML meta tags, which you can quickly inspect in the page source, HTTP headers do not appear in the page itself. To see them, you need your browser’s developer tools (the Network panel), a command-line client, or server access, which makes header-related issues harder for website owners and developers to troubleshoot.
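A short script is one such tool. The sketch below uses Python’s `urllib.request` to send a HEAD request and collects every `X-Robots-Tag` value, since a response may carry the header more than once (`curl -I <url>` shows the same raw headers). The helper names are hypothetical, and user-agent-specific values such as `googlebot: noindex` are returned as-is rather than interpreted.

```python
from http.client import HTTPMessage
from urllib.request import Request, urlopen


def x_robots_directives(headers):
    """Collect directive tokens from all X-Robots-Tag headers in an HTTPMessage."""
    directives = []
    for value in headers.get_all("X-Robots-Tag") or []:
        directives.extend(token.strip() for token in value.split(",") if token.strip())
    return directives


def fetch_x_robots(url):
    """Send a HEAD request and return the URL's X-Robots-Tag directives (needs network)."""
    with urlopen(Request(url, method="HEAD"), timeout=10) as response:
        return x_robots_directives(response.headers)


# Offline example: build the headers by hand instead of fetching a URL.
headers = HTTPMessage()
headers["X-Robots-Tag"] = "noindex"  # Assigning again appends a second header.
headers["X-Robots-Tag"] = "nofollow, noarchive"
print(x_robots_directives(headers))  # ['noindex', 'nofollow', 'noarchive']
```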

Considering these pros and cons is essential when deciding whether to utilize HTTP headers for controlling search engine indexing. The choice should be based on factors such as the technical capabilities of the website, the desired level of control, and the resources available for implementation and maintenance.

What methods can you use to verify the correct implementation of the noindex tag?

Verifying the effectiveness of your implementation of the noindex tag is essential to ensure that search engines correctly recognize and honor the tag. There are several tools and techniques available to help you in this process.

1. Google Search Console:

Google Search Console is a valuable tool for monitoring and managing your website’s presence in Google search results. It provides various features to check the indexing status of your pages. After adding your website to Google Search Console, you can use the “URL Inspection” tool to test individual pages and see how Googlebot crawls and indexes them. It also shows whether Google detected the noindex directive.

2. Robots.txt Tester:

The robots.txt file tells search engine crawlers which pages or directories they may crawl; it does not control indexing. In fact, for the noindex tag to work, the page must not be blocked in robots.txt: if a crawler is not allowed to fetch the page, it never sees the tag. Use the robots.txt report in Google Search Console (which replaced the older Robots.txt Tester) to confirm that pages carrying a noindex tag are not disallowed.
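A crawler can only obey a noindex tag on a page it is allowed to fetch, so a noindexed page should not also be disallowed in robots.txt. This sketch checks for that conflict locally with Python’s `urllib.robotparser`; the robots.txt content, the URL list, and the helper name `blocked_noindex_urls` are hypothetical.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""

# Hypothetical pages that carry <meta name="robots" content="noindex">.
NOINDEX_URLS = [
    "https://example.com/thank-you/",
    "https://example.com/private/members/",
]


def blocked_noindex_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the noindexed URLs that robots.txt prevents the crawler from fetching."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


print(blocked_noindex_urls(ROBOTS_TXT, NOINDEX_URLS))
# ['https://example.com/private/members/']
```

Any URL this returns has a noindex tag that crawlers will never read; either remove the Disallow rule or rely on access controls for that section instead.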

3. Manual Checks:

You can perform manual checks to verify the implementation of the noindex tag on specific pages. Open the page in a web browser and view the source code (usually accessible by right-clicking and selecting “View Page Source” or a similar option). Look for the robots meta tag and confirm that it contains the “noindex” directive. You can also inspect the page with your browser’s developer tools (usually accessible by right-clicking and selecting “Inspect” or a similar option) to check that the robots meta tag sits inside the <head> section.

4. Search Engine Results Pages (SERPs):

Another way to verify the effectiveness of the noindex tag is by checking search engine results pages. Search for keywords specific to the page you want to exclude, or run a site: query for its URL. If the page does not appear in the results, the search engine has most likely recognized and honored the noindex tag. Keep in mind that a previously indexed page only drops out after it has been recrawled.

5. Third-Party SEO Tools:

Various third-party SEO tools can help you analyze the indexing status of your website and individual pages. These tools can offer insights into how search engines index your content and whether they are honoring your noindex tags.

By combining these tools and techniques, you can ensure that your implementation of the noindex tag is effective and that search engines are correctly recognizing and honoring the tag, thereby preventing unwanted pages from being indexed.

A Comparison of Noindex Tags, Robots.txt Files, and Canonical Tags

When it comes to controlling the indexing of specific website pages at the page level, noindex tags play a crucial role. Let’s now explore the distinctions between noindex tags and robots.txt files, as well as canonical tags.

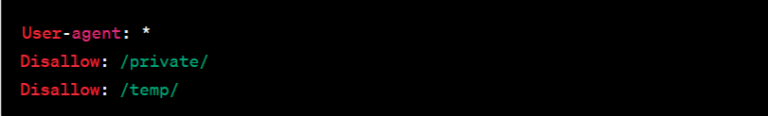

Robots.txt Files:

A robots.txt file is a plain text file located in the root directory of a website that tells search engine crawlers which pages they should or should not crawl. It uses a specific syntax of directives that control search engine bots’ behavior. For example, the lines `User-agent: *` and `Disallow: /private/` tell all crawlers not to crawl anything under the /private/ directory.

Use cases for robots.txt files:

- Blocking specific directories:

If you have directories or sections of your website that you want to prevent search engines from crawling, you can use robots.txt directives to disallow those directories.

- Crawling budget optimization:

By blocking unimportant or low-value pages, you can ensure that search engine crawlers spend their crawl budget on your most valuable content.
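For illustration, here is a small robots.txt covering both use cases, checked with Python’s `urllib.robotparser` (Python 3.8 or later for `site_maps()`). The directory names and sitemap URL are hypothetical. Python’s parser applies the first matching rule, while Google uses the most specific (longest) match, so the Allow line is placed before the broader Disallow to give the same result under both.

```python
from urllib.robotparser import RobotFileParser

# A hypothetical robots.txt: block two low-value directories for all crawlers,
# keep one help subfolder crawlable, and point crawlers at the sitemap.
ROBOTS_TXT = """\
User-agent: *
Allow: /search/help/
Disallow: /search/
Disallow: /cart/

Sitemap: https://example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for path in ["/products/shoes/", "/search/?q=shoes", "/search/help/", "/cart/checkout"]:
    allowed = parser.can_fetch("*", "https://example.com" + path)
    print(f"{path:20} {'crawl' if allowed else 'blocked'}")

print(parser.site_maps())  # ['https://example.com/sitemap.xml']
```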

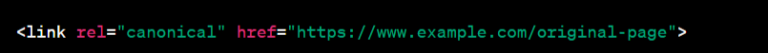

Canonical Tags:

Canonical tags are HTML tags that specify the preferred version of a web page when multiple versions with similar content exist. They help search engines identify a page’s canonical (or original) version and avoid duplicate content issues. Canonical tags are placed in the <head> section of a web page and use the following syntax: `<link rel="canonical" href="https://example.com/preferred-page/">`, where href is the URL of the preferred version.

Use cases for canonical tags:

- Duplicate content across multiple URLs:

If the same content is accessible through different URLs (e.g., through different sorting options or tracking parameters), you can use canonical tags to indicate the preferred version to search engines.

- Syndicated content:

If your content is republished on other websites, a canonical tag on the republished copy that points to your original tells search engines that your website is the source.
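When auditing duplicate content, it helps to read which canonical URL each page actually declares. This is a short sketch with Python’s standard `html.parser`; the names `CanonicalParser` and `canonical_url` are hypothetical. Relative `href` values are resolved against the page URL with `urljoin`, and only the first canonical tag is used.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class CanonicalParser(HTMLParser):
    """Finds the href of the first <link rel="canonical"> tag."""

    def __init__(self):
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        if tag != "link" or self.canonical is not None:
            return
        attributes = {name: (value or "") for name, value in attrs}
        # rel is a space-separated token list, compared case-insensitively.
        if "canonical" in attributes.get("rel", "").lower().split():
            self.canonical = attributes.get("href")


def canonical_url(page_url, html):
    """Return the absolute canonical URL declared by a page, or None."""
    parser = CanonicalParser()
    parser.feed(html)
    parser.close()
    if not parser.canonical:
        return None
    return urljoin(page_url, parser.canonical)


html = '<head><link rel="canonical" href="/shoes/"></head>'
print(canonical_url("https://example.com/shoes/?sort=price", html))
# https://example.com/shoes/
```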

In summary, here’s a brief comparison of these techniques:

- Noindex tags prevent specific web pages from being indexed.

- Robots.txt files instruct search engine crawlers on which pages or directories they should or should not crawl. They control crawling, not indexing: a blocked URL can still be indexed if other pages link to it.

- Canonical tags specify the preferred version of a web page when duplicate content exists across multiple URLs.

By utilizing these techniques appropriately, you can effectively manage how search engines index your web pages and improve your overall SEO performance.

Wrapping Up

Understanding and utilizing the noindex tag, robots.txt files, and canonical tags are crucial for controlling search engine indexing. The noindex tag prevents specific pages from appearing in search results, while robots.txt files guide crawlers on which pages to crawl. Canonical tags specify preferred versions of pages to avoid duplicate content. It’s essential to implement these techniques carefully and verify their effectiveness. By doing so, you can enhance your website’s visibility and ranking potential in search engine results.

About the Author

My name’s Semil Shah, and I pride myself on being the last digital marketer that you’ll ever need. Having worked internationally across agile and disruptive teams from San Francisco to London, I can help you take what you are doing in digital to a whole new level.